Color Overview

- If you have book access, this is mostly from chapter 1.

- Biology and physics dictate the colors we see.

- I am not going into that.

- We can do colors two ways

- Much of art uses a subtractive color system.

- You start with a white surface

- You add pigments that "remove" the light that is reflected.

- In this system, cyan, yellow and magenta are usually the primary colors.

- In most computer systems we use an additive color system.

- We start with a black screen

- Then add components of light to increase what is emitted.

- In this system, red, green and blue are the primary colors.

- What?

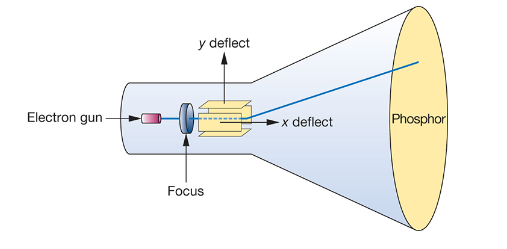

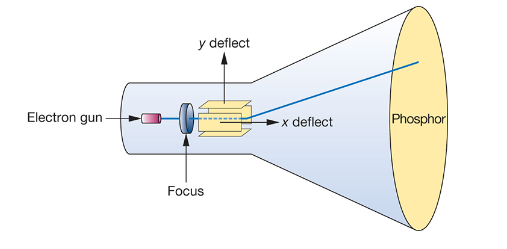
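The two color systems above can be sketched in a few lines of Python. This is an idealized illustration, not a physical model: colors are assumed to be (r, g, b) tuples in the range 0 to 1, and the names `add_light` and `pigment_to_rgb` are made up for this example.

```python
def add_light(*colors):
    """Additive mixing: start from black and sum the emitted light, clamping at 1."""
    r = min(1.0, sum(c[0] for c in colors))
    g = min(1.0, sum(c[1] for c in colors))
    b = min(1.0, sum(c[2] for c in colors))
    return (r, g, b)


def pigment_to_rgb(cyan, magenta, yellow):
    """Subtractive mixing: start from white and remove what each pigment absorbs.

    Idealized assumption: cyan removes red, magenta removes green,
    yellow removes blue.
    """
    return (1.0 - cyan, 1.0 - magenta, 1.0 - yellow)


RED, GREEN, BLUE = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)

print(add_light(RED, GREEN))          # red + green light
print(add_light(RED, GREEN, BLUE))    # all three primaries at full
print(pigment_to_rgb(0.0, 0.0, 0.0))  # no pigment: the white surface
print(pigment_to_rgb(1.0, 1.0, 0.0))  # cyan + magenta pigment
```

In this sketch, red and green light add up to yellow, while cyan and magenta pigment together leave only blue to be reflected.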

- Originally a monitor was a glass plate coated with phosphor

- An electron beam would "strike" the plate and the phosphor would "glow" for a short period of time.

- The beam was controlled by a "gun", which would sweep across the screen

- The gun would shoot through a "grid" which would discretize the output.

- The phosphor would only glow for a short period of time, so it needed to be "refreshed" by another pass over the screen.

- So the image would need to be "stored" in memory.

- This was called the frame buffer

- The frame buffer was just some memory to store whether each pixel was on or off.

- The gun would "fire" if the pixel were on, and not fire if the pixel were off.

- Thus a 600x500 display would need 300,000 bits to store the image.

- or 37,500 bytes, about 36.6 KB

- Since the gun would move from left to right, top to bottom, the tradition became to make the upper left of the screen 0,0

- At some point they decided to fire the gun at different intensities.

- Somewhere between 0 and 100%.

- With the intensity represented by an integer.

- Usually the number of levels was a power of 2, so the numbers worked nicely with memory.

- An 8-bit grayscale display would display intensities between 0 and 255.

- Remember, only one coat of phosphor

- But we now need 8 bits per pixel.

- 600x500x8 = 2,400,000 bits, or about 293 KB.
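The memory arithmetic above can be checked with a short script. This is a minimal sketch; the helper names `framebuffer_bits` and `bits_to_kib` are made up here, and a "KB" is assumed to mean 1024 bytes.

```python
def framebuffer_bits(width, height, bits_per_pixel):
    """Total number of bits needed to store one full image."""
    return width * height * bits_per_pixel


def bits_to_kib(bits):
    """Convert bits to kibibytes (8 bits per byte, 1024 bytes per KiB)."""
    return bits / 8 / 1024


for bpp in (1, 8):
    bits = framebuffer_bits(600, 500, bpp)
    print(f"{bpp} bit(s) per pixel: {bits:,} bits = {bits_to_kib(bits):.1f} KiB")
```

Running this gives roughly 36.6 KiB for the on/off display and roughly 293 KiB for the 8-bit one.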

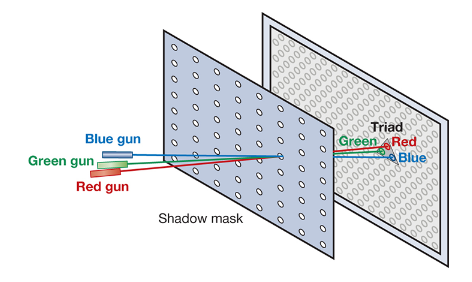

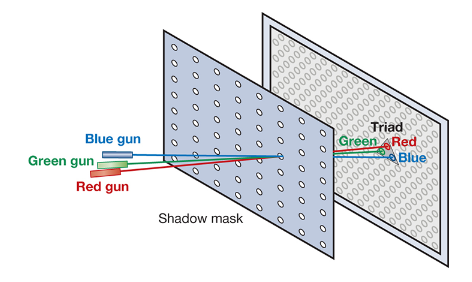

- So why not add more phosphor

- That way we could fire three different guns and get different colors.

- But now we need 8x3 bits per pixel.

- For the same 600x500 display, that is 7,200,000 bits, or about 879 KB of memory.
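One way to picture the 8x3 bits per pixel is as three 8-bit intensities packed into a single 24-bit value. The sketch below assumes red sits in the high byte; that ordering and the names `pack_rgb` and `unpack_rgb` are illustrative choices, and real hardware has used several layouts.

```python
def pack_rgb(r, g, b):
    """Pack three 8-bit intensities (0-255) into one 24-bit integer."""
    for value in (r, g, b):
        if not 0 <= value <= 255:
            raise ValueError("each channel must fit in 8 bits")
    return (r << 16) | (g << 8) | b


def unpack_rgb(pixel):
    """Recover the three 8-bit channels from a packed 24-bit pixel."""
    return ((pixel >> 16) & 0xFF, (pixel >> 8) & 0xFF, pixel & 0xFF)


pixel = pack_rgb(255, 128, 0)
print(hex(pixel))         # packed value
print(unpack_rgb(pixel))  # back to separate channels
```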

- While modern technology has changed

- The coordinate system is still here.

- We still think in terms of pixels.

- We still think in terms of a frame buffer, or a chunk of memory storing information about how we will "light" the pixels.

- But we often augment this with additional information.

- depth, alpha value, ...
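A frame buffer along these lines might look like the toy class below. It is only a sketch: the class name `FrameBuffer`, the per-pixel lists, and the default values are assumptions for illustration, not how any particular graphics API stores its buffers.

```python
class FrameBuffer:
    """A toy frame buffer: one color, depth, and alpha entry per pixel."""

    def __init__(self, width, height):
        self.width = width
        self.height = height
        n = width * height
        self.color = [(0, 0, 0)] * n     # RGB, starts as a black screen
        self.depth = [float("inf")] * n  # distance to the nearest surface
        self.alpha = [1.0] * n           # 1.0 means fully opaque

    def index(self, x, y):
        """Row-major index; (0, 0) is the upper-left pixel."""
        if not (0 <= x < self.width and 0 <= y < self.height):
            raise IndexError("pixel outside the frame buffer")
        return y * self.width + x

    def set_pixel(self, x, y, rgb, depth=0.0, alpha=1.0):
        i = self.index(x, y)
        self.color[i] = rgb
        self.depth[i] = depth
        self.alpha[i] = alpha

    def get_pixel(self, x, y):
        return self.color[self.index(x, y)]


fb = FrameBuffer(600, 500)
fb.set_pixel(0, 0, (255, 0, 0))      # upper-left corner
fb.set_pixel(599, 499, (0, 0, 255))  # lower-right corner
print(fb.index(0, 0), fb.index(599, 499))
```

The index runs left to right, then top to bottom, which mirrors the order in which the gun swept the screen.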

- Ok but ...

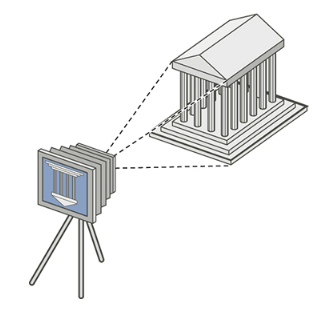

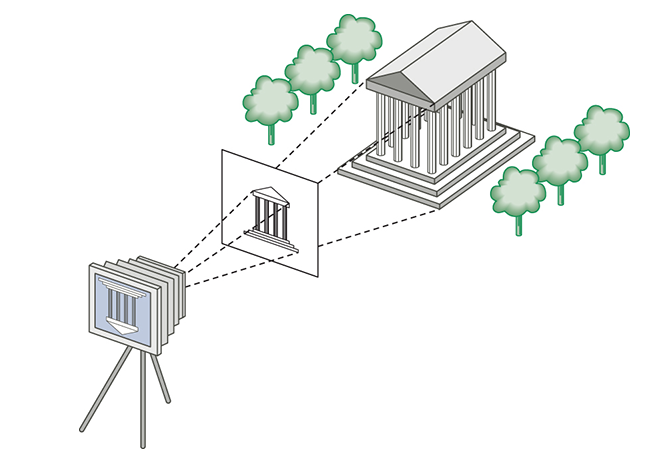

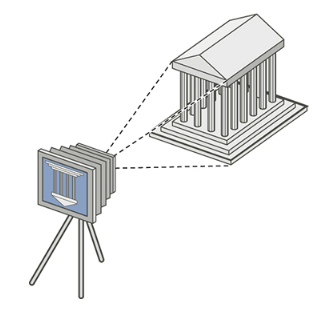

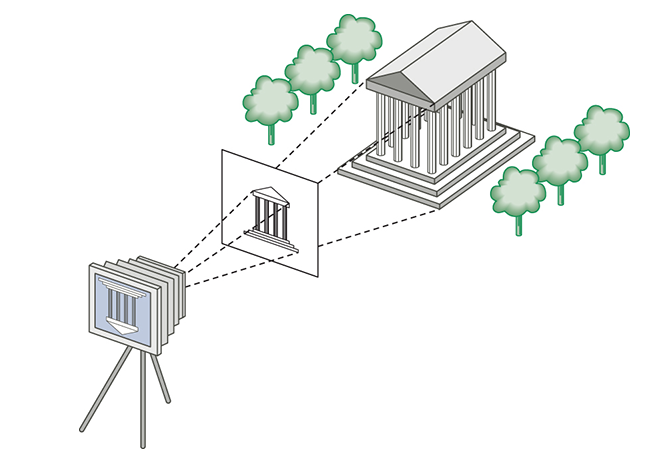

- In graphics, we generally view the world through a "synthetic camera"

- Light strikes the objects in the scene and travels through the "lens" of the camera to strike a "photographic plate" in the back of the camera.

- The intensity and color of the light determines what is recorded on this photographic plate.

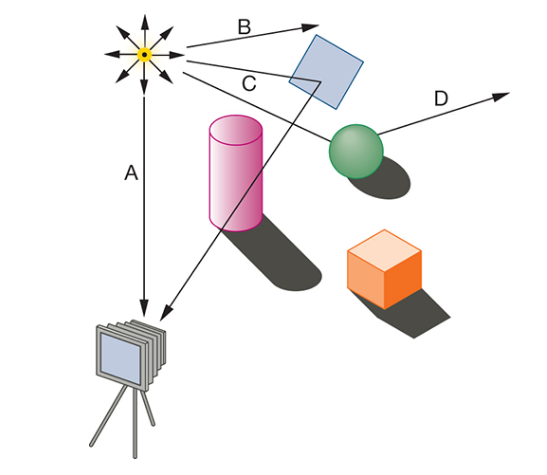

- We frequently reduce a light to a point source; that is, we assume that light from a single source is emitted from a single point in all directions.

- This is a simplifying assumption.

- Light sources are usually bigger (think of the wire in a light bulb, or the sun).

- But it makes our life simpler.

- So a model

- We generally have objects and light sources in a scene

- Both have properties

- Common properties like location.

- Lights have properties like color of light emitted.

- Objects have properties like the color and amount of light reflected.

- The viewer, or camera, is also important

- Location, orientation, ...
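The model above maps naturally onto a few small record types. The sketch below is one possible layout; every class and field name (`Light`, `SceneObject`, `Camera`, `Scene`, `reflectance`, and so on) is a hypothetical choice for illustration.

```python
from dataclasses import dataclass


@dataclass
class Light:
    location: tuple  # (x, y, z) position of the point source
    color: tuple     # RGB of the emitted light


@dataclass
class SceneObject:
    location: tuple
    reflected_color: tuple  # RGB of the light the surface reflects
    reflectance: float      # fraction of incoming light reflected, 0 to 1


@dataclass
class Camera:
    location: tuple
    orientation: tuple  # direction the camera is looking


@dataclass
class Scene:
    lights: list
    objects: list
    camera: Camera


scene = Scene(
    lights=[Light(location=(0, 10, 0), color=(1, 1, 1))],
    objects=[SceneObject(location=(0, 0, -5), reflected_color=(1, 0, 0), reflectance=0.8)],
    camera=Camera(location=(0, 0, 0), orientation=(0, 0, -1)),
)
print(len(scene.lights), len(scene.objects), scene.camera.location)
```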

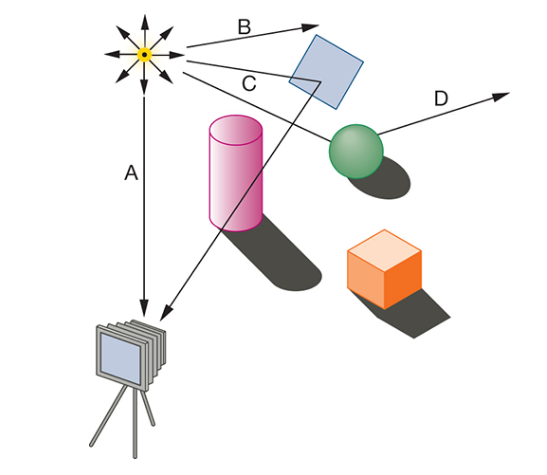

- In our model

- Light from a light source

- Strikes the viewer

- Strikes an object

- Travels off into space.

- If it strikes the viewer, it is added to the "image"

- If it travels off into infinity, it is discarded.

- If it strikes an object it is

- Absorbed by the object.

- Reflected off the object with altered properties.

- Transmitted through the object with altered properties.

- Absorbed light is ignored.

- Reflected and transmitted light is treated like light from a new light source (see light source above).

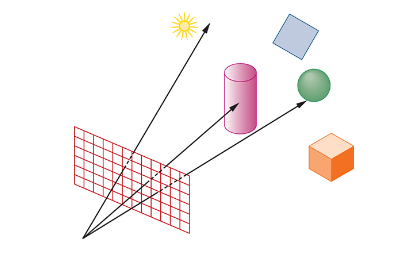

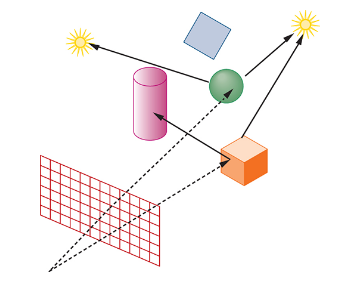

- There is just one problem with this model...

- There are infinitely many light rays at each source.

- And this can be multiplied by reflected and refracted light at a given point.

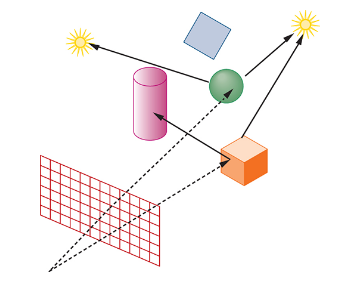

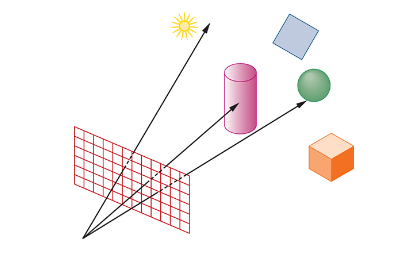

- A solution is to reverse the situation: ray casting.

- Instead of tracing light from the source, trace it from the destination.

- A ray can

- Travel to infinity, pixel is black.

- Hit a light source, pixel is the color of the light source.

- Hit an object.

- When a ray hits an object, we need to compute the color of the object at that point.

- From the point, cast a ray to each light source.

- If it hits, add the color of that light source as it interacts with the material at that point.

- If it hits another object first, recursive case.

- If the object is transparent or translucent

- Generate rays passing through the object as well.
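The steps above can be sketched as a very small ray caster. This is a simplified illustration under several assumptions: objects are spheres given as (center, radius, color) tuples, there is a single point light (so a ray never "hits" the light itself), shading is a plain diffuse term, a blocked light simply contributes nothing instead of triggering the recursive case, and transparency is left out. All function names are made up for this example.

```python
import math


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v):
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest hit in front of it, or None.

    Rays that start inside a sphere are not handled in this sketch.
    """
    oc = sub(origin, center)
    b = 2.0 * dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * c
    if disc < 0:
        return None
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 1e-6 else None


def cast_ray(origin, direction, spheres, light_pos, light_color):
    """Color seen along one ray traced from the viewer into the scene."""
    nearest = None
    for center, radius, color in spheres:
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, center, color)
    if nearest is None:
        return (0.0, 0.0, 0.0)  # ray travels to infinity: pixel is black

    t, center, color = nearest
    point = tuple(o + t * d for o, d in zip(origin, direction))
    normal = normalize(sub(point, center))
    to_light = sub(light_pos, point)
    light_dist = math.sqrt(dot(to_light, to_light))
    to_light = normalize(to_light)

    # Cast a ray from the hit point toward the light source.
    for other_center, other_radius, _ in spheres:
        s = hit_sphere(point, to_light, other_center, other_radius)
        if s is not None and s < light_dist:
            return (0.0, 0.0, 0.0)  # simplification: a blocked light adds nothing

    # The light is visible: combine its color with the material color.
    diffuse = max(0.0, dot(normal, to_light))
    return tuple(diffuse * lc * oc for lc, oc in zip(light_color, color))


red_sphere = ((0.0, 0.0, -5.0), 1.0, (1.0, 0.0, 0.0))
light = (0.0, 0.0, 10.0)
white = (1.0, 1.0, 1.0)
print(cast_ray((0, 0, 0), (0, 0, -1), [red_sphere], light, white))  # hits the sphere
print(cast_ray((0, 0, 0), (0, 1, 0), [red_sphere], light, white))   # misses everything
```

A full image would come from calling `cast_ray` once per pixel, with the ray direction chosen to pass through that pixel.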

- We will actually end up doing something different from this for quite a while.

- We will do a "cheap" light computation on the objects.

- We will then "project" the objects to the photographic plate
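As a rough idea of what "projecting" to the photographic plate can mean, here is a pinhole-style perspective projection. It is a sketch under stated assumptions, not necessarily the method used later: the camera is assumed to sit at the origin looking down the negative z axis, the plate is at distance `plate_distance`, and the function name `project` is made up. The "cheap" light computation is not shown, since the notes do not specify it yet.

```python
def project(point, plate_distance=1.0):
    """Project a 3D point onto a plate at z = -plate_distance.

    Assumes the camera sits at the origin looking down the negative z axis.
    """
    x, y, z = point
    if z >= 0:
        raise ValueError("point is not in front of the camera")
    scale = plate_distance / -z
    return (x * scale, y * scale)


triangle = [(-1.0, 0.0, -2.0), (1.0, 0.0, -2.0), (0.0, 2.0, -4.0)]
print([project(p) for p in triangle])
```

Points farther from the camera are scaled down more, which is what makes distant objects look smaller on the plate.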

- So back to colors

- We will use RGB as our primary color system.

- We are dealing with adding light after all.

- We will assume that lights have RGB properties.

- A light turned off has RGB values of (0,0,0)

- A light with all three values at full has a value of (1,1,1)

- We might end up scaling these between 0 and N
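Scaling between 0 and N can be sketched as below. This assumes colors are stored as floats in the range 0 to 1 with a light at full intensity taken to be (1, 1, 1), picks N = 255 as a default, and clamps out-of-range values; the name `scale_color` is made up for this example.

```python
def scale_color(color, n=255):
    """Map an RGB color with components in [0, 1] to integers in [0, n]."""
    return tuple(round(max(0.0, min(1.0, c)) * n) for c in color)


LIGHT_OFF = (0.0, 0.0, 0.0)
LIGHT_FULL = (1.0, 1.0, 1.0)

print(scale_color(LIGHT_OFF))
print(scale_color(LIGHT_FULL))
print(scale_color((1.0, 0.25, 0.0)))
```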

- Look

RGB (Red, Green, Blue)