
Cache

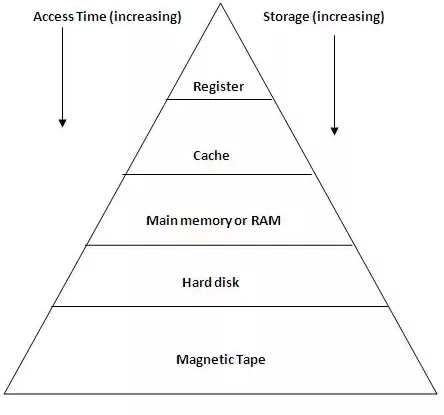

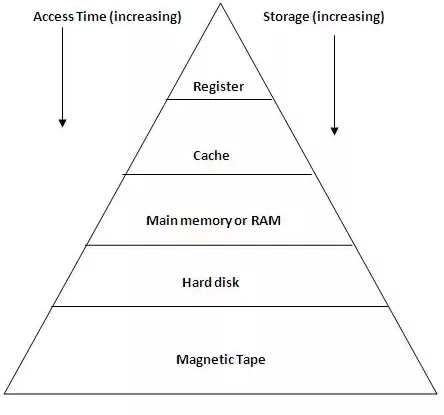

- Cache is a critical component of the memory hierarchy.


- Some versions of the hierarchy don't include registers.

- But in general, as you go down the triangle

- Size increases

- Cost per byte decreases

- Access time increases

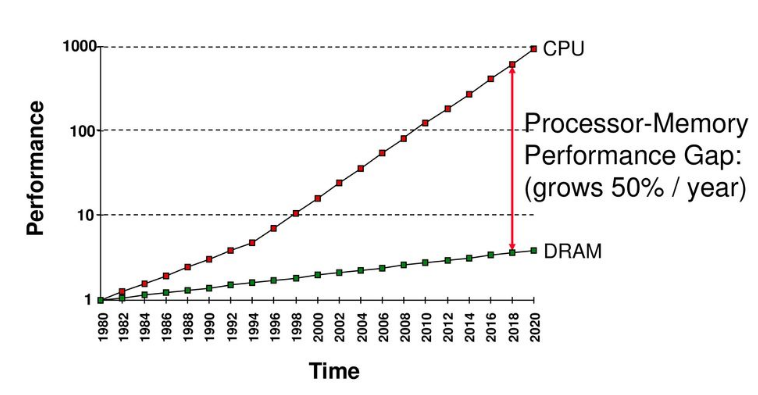

- The memory gap (processor speed has improved much faster than main-memory speed) is the problem cache is meant to hide.

- Cache can actually occupy multiple layers of this pyramid

- Level 1 (L1) cache

- Level 2 (L2) cache

- ...

- Cache works on two principles

- The principle of spatial locality

- If a data location is referenced, nearby locations are likely to be accessed soon.

- The principle of temporal locality

- If a data item is referenced, it is likely to be referenced again soon.
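To make the two principles concrete, here is a minimal Python sketch of an idealized cache that never evicts anything (an assumption, so every miss is simply the first touch of a block). It uses the 4-word block size from the direct-mapped example below; the function name `count_hits` and the access traces are hypothetical.

```python
def count_hits(word_addresses, words_per_block=4):
    """Count hits for an idealized cache that never evicts (assumption).

    Addresses are word addresses; a whole block is loaded on each miss.
    """
    loaded = set()  # block numbers currently in the (unbounded) cache
    hits = 0
    for addr in word_addresses:
        block = addr // words_per_block
        if block in loaded:
            hits += 1
        else:
            loaded.add(block)  # miss: bring the whole block in
    return hits


sequential = list(range(16))     # spatial locality: neighbours share a block
strided = list(range(0, 64, 4))  # one access per block: no reuse at all
repeated = [0, 8] * 3            # temporal locality: the same words again

print(count_hits(sequential))  # 12 (4 misses, one per block)
print(count_hits(strided))     # 0
print(count_hits(repeated))    # 4 (only the first two accesses miss)
```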

- A block of cache, also known as a line, is the unit of data moved between the cache and memory.

- A cache hit (or hit) means that the requested item is in the cache; a cache miss (or miss) means that it is not.

- The hit rate is the fraction of accesses for which the requested item is in the cache.

- The miss rate = 1 - hit rate.

- The hit time is the time it takes to determine whether an item is in the cache and, if so, to return it.

- The miss penalty is the time it takes to retrieve an item that is not in the cache.
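These definitions combine into simple arithmetic. The sketch below computes hit rate and miss rate from counts, then uses the standard average memory access time formula, AMAT = hit time + miss rate × miss penalty (a formula these notes do not state explicitly). The function name and the cycle counts are made-up values for illustration.

```python
def cache_stats(hits, accesses, hit_time, miss_penalty):
    """Return (hit rate, miss rate, average memory access time)."""
    hit_rate = hits / accesses
    miss_rate = 1 - hit_rate  # miss rate = 1 - hit rate
    # Every access pays the hit time; the fraction that miss
    # additionally pay the miss penalty.
    amat = hit_time + miss_rate * miss_penalty
    return hit_rate, miss_rate, amat


# Hypothetical numbers: 950 hits out of 1000 accesses,
# 1-cycle hit time, 100-cycle miss penalty.
hit_rate, miss_rate, amat = cache_stats(hits=950, accesses=1000,
                                        hit_time=1, miss_penalty=100)
print(f"hit rate {hit_rate:.2f}, miss rate {miss_rate:.2f}, AMAT {amat:.1f} cycles")
```

Even a 5% miss rate makes the average access six times slower than a hit here, which is why small changes in hit rate matter.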

- There are three types of cache misses

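- A compulsory miss occurs on the first access to a block, which cannot have been in the cache before.

- A capacity miss occurs when the cache cannot hold all the blocks the program is using, so a block that was evicted for lack of space has to be fetched again.

- A conflict miss occurs when two data items map to the same cache location (a collision) and evict each other.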
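One common way to separate the three miss types in a simulation is the so-called 3C method: a miss is compulsory if the block was never referenced before, capacity if a fully associative LRU cache of the same size would also miss, and conflict otherwise. The sketch below applies it to a direct-mapped cache (block `b` goes to line `b mod num_lines`); it works on block numbers rather than byte addresses, and `classify_misses` is a hypothetical name.

```python
from collections import OrderedDict


def classify_misses(block_trace, num_lines):
    """Classify misses of a direct-mapped cache as compulsory/capacity/conflict."""
    direct = [None] * num_lines  # direct mapped: one block per line
    full = OrderedDict()         # fully associative LRU reference cache
    seen = set()                 # blocks referenced at least once
    counts = {"hit": 0, "compulsory": 0, "capacity": 0, "conflict": 0}
    for block in block_trace:
        # Update the fully associative LRU cache of the same size.
        full_hit = block in full
        if full_hit:
            full.move_to_end(block)        # most recently used goes last
        else:
            if len(full) == num_lines:
                full.popitem(last=False)   # evict least recently used
            full[block] = True
        # Access the direct-mapped cache.
        index = block % num_lines
        if direct[index] == block:
            counts["hit"] += 1
        else:
            direct[index] = block
            if block not in seen:
                counts["compulsory"] += 1
            elif not full_hit:
                counts["capacity"] += 1
            else:
                counts["conflict"] += 1
        seen.add(block)
    return counts


# Blocks 0 and 8 both map to line 0 of an 8-line cache.
print(classify_misses([0, 8, 0, 8, 0, 8], num_lines=8))
# Four blocks cycled through a 2-line cache: too many for any placement.
print(classify_misses([0, 1, 2, 3, 0, 1, 2, 3], num_lines=2))
```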

- There are two main types of cache organization

- Direct mapped cache maps each memory block to exactly one cache location.

- Think of assigned parking spaces.

- Suppose the cache has 8 lines

- There are 8 places to put data (3 bits to select one)

- Assume each block is 4 words.

- Each line then holds 4 words (2 bits to select one)

- We then need to map each address to a cache location.

- See the picture on page 396.

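- The cache holds 8 × 4 × word size bits of data (with 32-bit words, 8 × 4 × 32 = 1024 bits, or 128 bytes), not counting tags and valid bits.

- The bottom two bits select the byte within a word (assuming byte addressing and 4-byte words).

- The next two bits identify which word of the block.

- The next three bits identify which line of the cache (the index).

- The remaining 32 - (2 + 2 + 3) = 25 bits of a 32-bit address form the tag, which identifies which memory block is currently stored in that line.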

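- In addition, a valid bit indicates whether the cache entry holds valid data.

- Finally, a dirty bit is sometimes used (in write-back caches) to indicate whether the entry has been modified since it was loaded and so must be written back to memory before it is replaced.

- Because each block has only one possible location, direct mapping can cause collisions (conflict misses) even when other cache lines are unused.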
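The 8-line, 4-words-per-block example can be sketched in Python as follows, assuming byte addressing, 32-bit addresses, and 4-byte words as in the bit counts above. `split_address` and `DirectMappedCache` are hypothetical names; the cache tracks only valid bits and tags (no data, no dirty bit), which is enough to decide hit or miss.

```python
ADDRESS_BITS = 32
BYTE_OFFSET_BITS = 2   # 4-byte words (assumption)
BLOCK_OFFSET_BITS = 2  # 4 words per block
INDEX_BITS = 3         # 8 lines
TAG_BITS = ADDRESS_BITS - (BYTE_OFFSET_BITS + BLOCK_OFFSET_BITS + INDEX_BITS)  # 25


def split_address(addr):
    """Split a byte address into (tag, index, word in block, byte in word)."""
    byte_offset = addr & ((1 << BYTE_OFFSET_BITS) - 1)
    word = (addr >> BYTE_OFFSET_BITS) & ((1 << BLOCK_OFFSET_BITS) - 1)
    index = (addr >> (BYTE_OFFSET_BITS + BLOCK_OFFSET_BITS)) & ((1 << INDEX_BITS) - 1)
    tag = addr >> (BYTE_OFFSET_BITS + BLOCK_OFFSET_BITS + INDEX_BITS)
    return tag, index, word, byte_offset


class DirectMappedCache:
    def __init__(self):
        lines = 1 << INDEX_BITS
        self.valid = [False] * lines  # valid bit per line
        self.tags = [0] * lines       # tag stored in each line

    def access(self, addr):
        """Return True on a hit; on a miss, load the block into its line."""
        tag, index, _, _ = split_address(addr)
        if self.valid[index] and self.tags[index] == tag:
            return True
        # Miss: whatever was in this line is replaced, even if other lines are empty.
        self.valid[index] = True
        self.tags[index] = tag
        return False


cache = DirectMappedCache()
for addr in [0, 4, 128, 0]:  # miss, hit, miss, miss
    print(addr, split_address(addr), "hit" if cache.access(addr) else "miss")
```

Addresses 0 and 128 differ only in their tag bits, so they land on line 0 and evict each other while the other seven lines stay empty.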

- Fully associative cache allows a block to be placed anywhere in cache.

- There is no index, so the tags of all cache entries must be searched.

- But there will never be a conflict miss.

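- A cache replacement policy is needed.

- It decides which entry is removed when the cache is full and a miss brings in a new block.

- Evict the entry that will be needed furthest in the future: ideal, but we can't do this, because it requires knowing future accesses.

- LRU (Least Recently Used): evict the entry that has gone unused the longest.

- Random: evict a randomly chosen entry; this is about as good as LRU.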
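The three policies can be compared on a fully associative cache with a small simulation. This is a sketch with hypothetical function names: LRU is tracked with an `OrderedDict` (most recently used at the end), random uses a seeded `random.Random`, and the "needed furthest in the future" policy (often called optimal, or Belady's MIN) is possible here only because the whole trace is known in advance.

```python
import random
from collections import OrderedDict


def simulate_fully_associative(block_trace, num_lines, policy="lru", seed=0):
    """Count misses for a fully associative cache using "lru" or "random"."""
    rng = random.Random(seed)
    cache = OrderedDict()  # block -> True, ordered from least to most recent
    misses = 0
    for block in block_trace:
        if block in cache:
            cache.move_to_end(block)  # mark as most recently used
            continue
        misses += 1
        if len(cache) == num_lines:
            if policy == "lru":
                cache.popitem(last=False)  # least recently used is first
            elif policy == "random":
                del cache[rng.choice(list(cache))]
            else:
                raise ValueError(f"unknown policy: {policy}")
        cache[block] = True
    return misses


def simulate_optimal(block_trace, num_lines):
    """Evict the block whose next use is furthest away (needs the future)."""
    def next_use(start, b):
        for j in range(start, len(block_trace)):
            if block_trace[j] == b:
                return j
        return len(block_trace)  # never used again: furthest possible

    cache = set()
    misses = 0
    for i, block in enumerate(block_trace):
        if block in cache:
            continue
        misses += 1
        if len(cache) == num_lines:
            cache.remove(max(cache, key=lambda b: next_use(i + 1, b)))
        cache.add(block)
    return misses


trace = [0, 1, 2, 0, 1, 3, 0, 1, 2, 3]
print("optimal:", simulate_optimal(trace, 3))
print("LRU:", simulate_fully_associative(trace, 3, "lru"))
print("random:", simulate_fully_associative(trace, 3, "random"))
```

On this trace the optimal policy gives 5 misses and LRU gives 6; random depends on the seed but can never do better than the optimal policy.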

- N-way set associative cache combines these: the index selects a set of N lines, and a block can be placed in any line within its set.
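A sketch of an N-way set associative cache, assuming LRU replacement within each set: the index (block number mod number of sets) chooses the set, and the block may go in any of that set's `ways` lines. With `ways=1` it behaves as a direct-mapped cache, and with `num_sets=1` as a fully associative one. `SetAssociativeCache` and `count_misses` are hypothetical names.

```python
from collections import OrderedDict


class SetAssociativeCache:
    """num_sets * ways lines; LRU replacement inside each set (assumption)."""

    def __init__(self, num_sets, ways):
        self.num_sets = num_sets
        self.ways = ways
        # One OrderedDict per set: tag -> True, least recently used first.
        self.sets = [OrderedDict() for _ in range(num_sets)]

    def access(self, block):
        """Return True on a hit; on a miss, load the block into its set."""
        index = block % self.num_sets  # which set
        tag = block // self.num_sets   # identifies the block within the set
        s = self.sets[index]
        if tag in s:
            s.move_to_end(tag)
            return True
        if len(s) == self.ways:
            s.popitem(last=False)      # evict the set's LRU line
        s[tag] = True
        return False


def count_misses(cache, trace):
    return sum(not cache.access(b) for b in trace)


trace = [0, 8, 0, 8, 0, 8]
print(count_misses(SetAssociativeCache(num_sets=8, ways=1), trace))  # 6 (direct mapped)
print(count_misses(SetAssociativeCache(num_sets=4, ways=2), trace))  # 2 (2-way)
print(count_misses(SetAssociativeCache(num_sets=1, ways=8), trace))  # 2 (fully associative)
```

All three configurations have 8 lines; two ways per set are already enough to remove the conflict misses on this trace.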